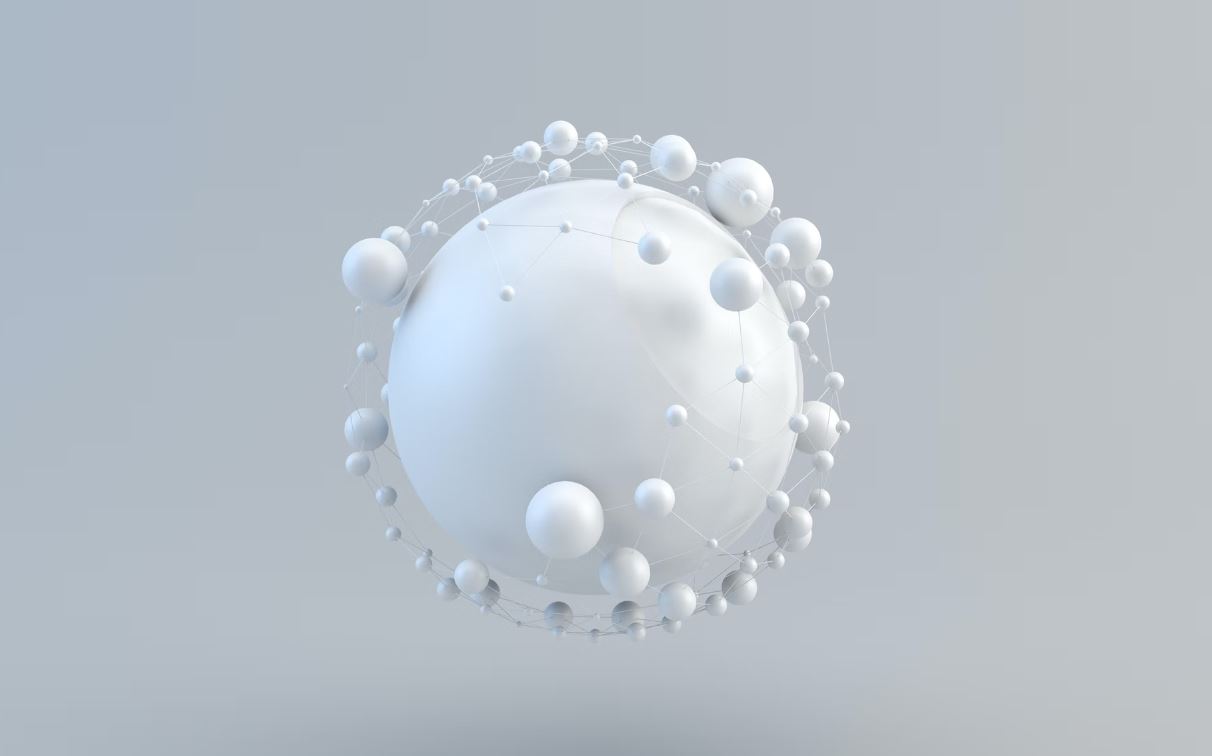

Credit: Unsplash

POINT-E assembles 3D models from text inputs.

After achieving a degree of success with their text-to-image generator DALL-E (as well as controversy, but that’s another story), AI startup OpenAI has been tinkering with other kinds of AI-powered development software. Their latest creation operates on a similar principle to DALL-E, but at the next level up: rather than flat images, POINT-E creates 3D models.

POINT-E turns a text prompt into a point cloud: a 3D model made up of many colored points in space that together trace the shape of the requested object. A user enters a prompt, and POINT-E assembles a fully three-dimensional model to match. As was the case when DALL-E was starting out, the quality of POINT-E’s creations varies, but the big draw is the speed at which they’re created. Other state-of-the-art text-to-3D generators can take multiple GPU-hours to produce a single detailed model; according to OpenAI, POINT-E produces its simpler models in one to two minutes on a single GPU.
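To make the idea of a point cloud concrete, here is a minimal sketch in plain Python. It is not POINT-E's code or output: the `PointCloud` class and the `sample_sphere_cloud` helper are hypothetical names invented for illustration, and the "model" is just a sphere whose points are scattered at random and colored by height.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class PointCloud:
    """Illustrative container (not POINT-E's own type): parallel lists of positions and colors."""
    coords: list[tuple[float, float, float]]  # one (x, y, z) per point
    colors: list[tuple[float, float, float]]  # one (r, g, b) per point, each in [0, 1]

    def __len__(self) -> int:
        return len(self.coords)


def sample_sphere_cloud(num_points: int, radius: float = 1.0, seed: int = 0) -> PointCloud:
    """Scatter points uniformly over a sphere's surface and color them by height."""
    rng = random.Random(seed)
    coords, colors = [], []
    for _ in range(num_points):
        # Uniform sampling on a sphere: z uniform in [-1, 1], angle uniform in [0, 2*pi].
        z = rng.uniform(-1.0, 1.0)
        theta = rng.uniform(0.0, 2.0 * math.pi)
        r_xy = math.sqrt(max(0.0, 1.0 - z * z))
        coords.append((radius * r_xy * math.cos(theta),
                       radius * r_xy * math.sin(theta),
                       radius * z))
        # Color gradient: blue at the bottom of the sphere, red at the top.
        t = (z + 1.0) / 2.0
        colors.append((t, 0.0, 1.0 - t))
    return PointCloud(coords, colors)


cloud = sample_sphere_cloud(1024)
print(len(cloud), "points; first point:", cloud.coords[0], "color:", cloud.colors[0])
```

The point count of 1,024 is an arbitrary choice for this sketch. The takeaway is that a point cloud stores no surfaces or faces, only positions and colors, which is part of why it is a comparatively cheap format to generate.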

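POINT-E works in two stages: a text-to-image model first renders a synthetic view of the object described in the prompt, and a second model then converts that image into a 3D point cloud. “Our text-to-image model leverages a large corpus of (text, image) pairs, allowing it to follow diverse and complex prompts, while our image-to-3D model is trained on a smaller dataset of (image, 3D) pairs,” the OpenAI research team, led by Alex Nichol, wrote in a research paper about the system.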
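The quote describes two models chained together, one going from text to an image and one going from an image to 3D. The sketch below shows only that data flow. Both stage functions are hypothetical stand-ins written for this example (they are not OpenAI's models or API), and they return toy data rather than anything learned.

```python
from typing import Callable, List, Tuple

Image = List[List[Tuple[int, int, int]]]            # rows of RGB pixels
Point = Tuple[float, float, float, int, int, int]   # x, y, z, r, g, b


def text_to_image_stub(prompt: str, size: int = 4) -> Image:
    """Hypothetical stand-in for stage 1: a flat-colored image derived from the prompt."""
    shade = sum(prompt.encode("utf-8")) % 256
    return [[(shade, 255 - shade, 128) for _ in range(size)] for _ in range(size)]


def image_to_points_stub(image: Image) -> List[Point]:
    """Hypothetical stand-in for stage 2: lifts each pixel to a colored point on the z=0 plane."""
    return [(float(x), float(y), 0.0, r, g, b)
            for y, row in enumerate(image)
            for x, (r, g, b) in enumerate(row)]


def generate(prompt: str,
             stage1: Callable[[str], Image] = text_to_image_stub,
             stage2: Callable[[Image], List[Point]] = image_to_points_stub) -> List[Point]:
    """Chain the two stages: text -> image -> point cloud."""
    return stage2(stage1(prompt))


points = generate("a red traffic cone")
print(len(points), "points")
```

Splitting the work this way means each stage can be trained on the data that exists for it: plentiful captioned images for the first, and a smaller set of images paired with 3D models for the second.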

Anyone interested in trying POINT-E can download its source code from GitHub. There is no word yet on whether or when a consumer-ready version will be available.